A few weeks ago, OpenAI launched ChatGPT Agent, a general-purpose task automation tool combining capabilities from OpenAI's Operator and Deep Research. Here are some honest thoughts on my experience with it so far.

What Happened

OpenAI trained a new (unnamed) model to power Agent, and it has posted strong results on benchmarks like Humanity’s Last Exam. Benchmarks don’t always reflect real-world value, but they’re still a useful reference for gauging general competence, and by that measure Agent is clearly state-of-the-art. The exciting part is that the model was trained using reinforcement learning (RL). Instead of being hard-coded to follow a fixed set of instructions, it learned which tools and approaches work best by being rewarded for the quality of its results. OpenAI will probably collect copious amounts of data on how Agent performs in the wild and use it to further improve the model. With that in mind, I think these systems will be significantly better in 12 months’ time.

Benchmark results aside, most everyday users care more about what AI can do for them than about how many questions it answered correctly on a difficult exam (and rightfully so!). Elon Musk made an interesting comment along these lines during the Grok 4 release: “We’re running out of test questions to ask AI. Everything has become trivial. The ultimate reasoning test for AI is going to be reality. Can it build a car? Design a rocket? Discover a new drug?”

While significant progress has been made with multimodal AI and agentic capabilities, we’re not there yet. Still, the launch of Agent is a meaningful step from purely conversational AI toward active task execution via natural language. I tested it out and want to share some early thoughts, practical use cases, and the implications I see on the horizon.

ChatGPT Agent Overview

ChatGPT Agent is a computer-using AI that can conduct research, create content (slide decks, spreadsheets), and perform online tasks like booking reservations or shopping. It operates within a cloud-based virtual machine (VM) equipped with a GUI browser, a text browser, a terminal, and more.

Users can let Agent run autonomously or step in manually when needed (for example, to enter payment details). Agent also supports ChatGPT connectors, which let it work with user-specific tools and data for personalized tasks.

Implications

The release of Agent signals a continuing trend: the major LLM labs are turning themselves into productivity platforms. For everyday AI users, this raises an important question: stick with a major provider that can do everything but only does a few things really well, or take a best-of-breed approach with a specialized tool for each task? For example, Gamma is a world-class content creation tool, but many users may prefer ChatGPT Agent's ability to create content (albeit not as well) so they can stick with one AI subscription for simplicity. In the long run, I believe the truly best-in-class point solutions (like Gamma) will prevail, but there will be significant market consolidation as more users commit to a single AI productivity suite or a handful of them.

Considerations

Where I see Agent really shining is when it's paired with ChatGPT connectors. I can envision lots of enterprise use cases that query a knowledge base (Google Drive, SharePoint, or Dropbox) and perform tasks based on the context in those systems. That makes data quality critical to getting those use cases right (garbage in, garbage out), since you don't want Agent basing its outputs on bad data. And because OpenAI now supports the Model Context Protocol (MCP), savvy developers on a ChatGPT Enterprise plan can connect IT tools that don't have pre-built connectors, so the sky is the limit. Being able to perform tasks using enterprise system data will unlock a lot of new use cases and productivity gains.

Many enterprise software vendors will build their own agents, but they'll likely focus on the core business processes native to their software. Agent has a unique advantage here: it can perform cross-platform tasks, taking source data from enterprise systems and using it to create content or browse the web.

The continued push toward agentic capabilities could also change how software is licensed. Instead of provisioning a user license for every employee in a department, companies might license only a handful of 'human users' for oversight, plus many more 'agent' licenses to handle departmental tasks. This is still a ways off, but as agents gain more capabilities, I think we'll see more and more software companies adopt this licensing model, both for their own native agents and for third-party agents.

Taking It for a Spin

I tested Agent with a personal task and a professional one.

Personal Use Case

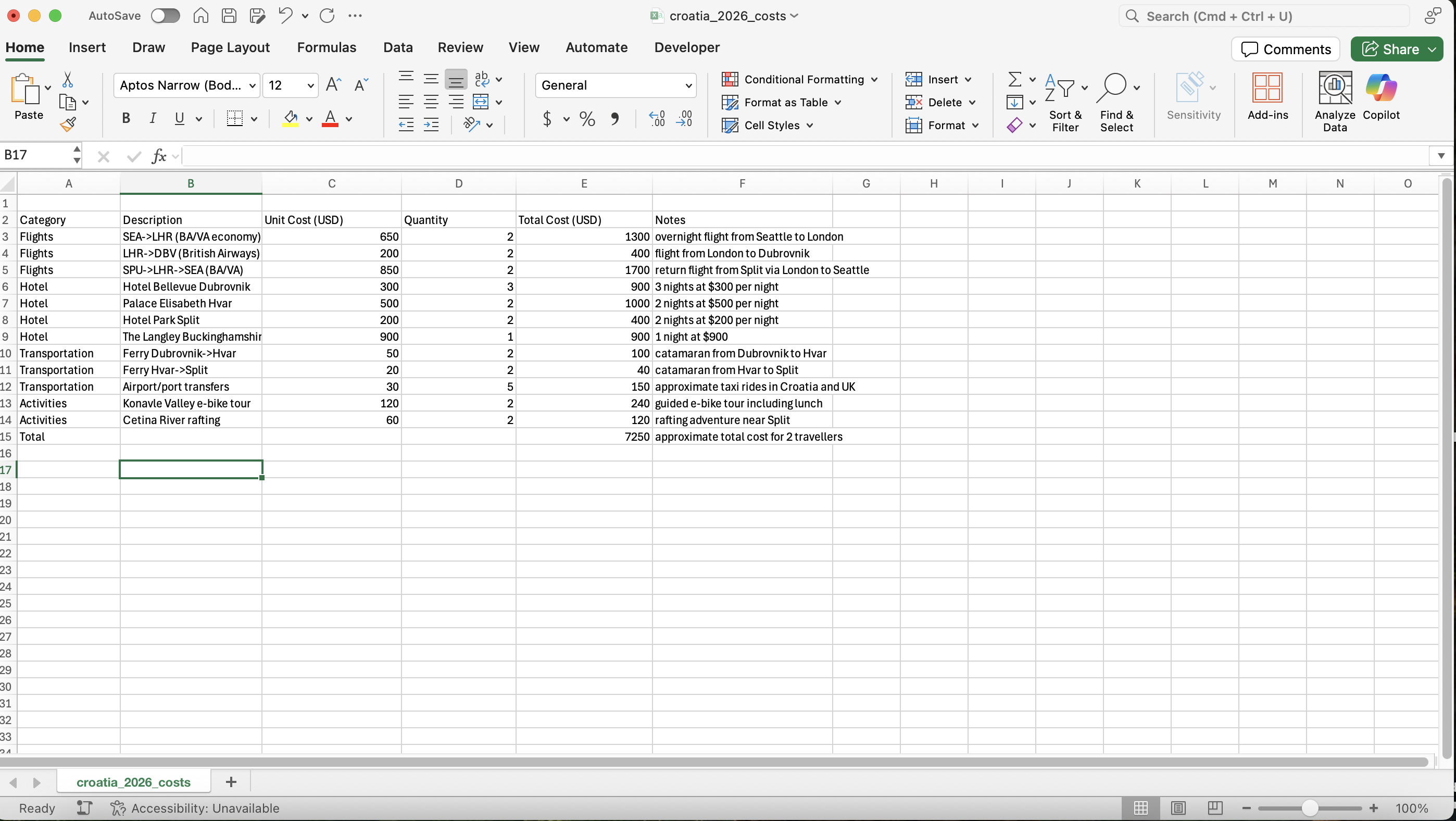

I asked Agent to build a vacation itinerary that accounted for travel windows and food allergies. It delivered a detailed plan, built a budget spreadsheet, and created a PowerPoint presentation to visualize the trip. The whole package was polished and functional.

Professional Use Case

This one combined Deep Research, content creation, and connectors: a very powerful combination for sales, pre-sales, and customer success teams.

I set up a free HubSpot CRM account, enabled the ChatGPT HubSpot connector, and created a fictional deal under my company’s name. I added a contact (myself), set project objectives, budget, and timeline, and then asked Agent to create a PowerPoint presentation (I prompted it with absolutely zero context).

Without any further input, Agent pulled the CRM data, supplemented it with external research, and generated a nearly business-ready deck. The results weren’t final, but they were a very solid starting point.

Ideas for Taking It Further

- Integrate pricing data into your CRM and generate proposals in Excel or PowerPoint.

- Draft territory plans or RFP responses.

- Parse incoming RFPs and map requirements to your company’s solutions using documentation from internal knowledge bases.

Final Thoughts

Agent is still early. It's faster than Operator, but still quite slow. For now, I see it as a useful starting point for some tasks, but that's about it. When evaluating new AI tools, I think a lot about the opportunity cost of my time. Agentic capabilities are exciting, but the real question is whether offloading a task to Agent is worth waiting 30–40% longer for the result than if I had done it myself. Right now, I don't think the hands-off convenience justifies the extra wait, but that could change if I find use cases where Agent executes faster.
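To make that trade-off concrete, here is a rough back-of-the-envelope sketch. Only the 30–40% slowdown comes from my testing; the function name `agent_tradeoff`, the 30-minute manual baseline, and the 5 minutes of hands-on prompting and review are hypothetical values chosen purely for illustration.

```python
def agent_tradeoff(manual_minutes: float, slowdown: float, hands_on_minutes: float) -> dict:
    """Compare doing a task yourself with delegating it to Agent.

    manual_minutes:   time to do the task by hand (hypothetical baseline)
    slowdown:         how much longer Agent takes, as a fraction (0.3 = 30% longer)
    hands_on_minutes: your own time spent prompting and reviewing (assumed)
    """
    # Total wall-clock time until Agent's result is ready.
    agent_wall_clock = manual_minutes * (1 + slowdown)
    # Extra latency compared with just doing it yourself.
    extra_wait = agent_wall_clock - manual_minutes
    # Your time freed up for other work while Agent runs.
    time_freed = manual_minutes - hands_on_minutes
    return {
        "agent_wall_clock": agent_wall_clock,
        "extra_wait": extra_wait,
        "time_freed": time_freed,
    }


# Hypothetical example: a 30-minute task, 5 minutes of hands-on time.
for slowdown in (0.3, 0.4):
    print(slowdown, agent_tradeoff(30, slowdown, 5))
```

Separating wall-clock latency (how long until the result is ready) from hands-on time (how much of your own time the task consumes) is the crux of the question: a task can take longer overall yet still free up most of your time, which only pays off if you have something better to do while you wait.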

If you're testing, building, or have any powerful use cases you've experimented with, please reach out!